
Good morning, AI enthusiasts! Google DeepMind just released a documentary built from five years of behind-the-scenes footage showing the AlphaFold team cracking protein folding, work that went on to earn a Nobel Prize.

The documentary captures the exact moment researchers realized they had solved biology's 50-year grand challenge, making the case that AI can move from mastering games to delivering real scientific breakthroughs.

In today's recap:

DeepMind releases five-year Nobel Prize documentary

Anthropic estimates AI cuts task time by 80%

Perplexity launches AI shopping with PayPal

Warner Music partners with Suno for licensed AI music

New AI tools & prompts

GOOGLE DEEPMIND

Five years inside the AlphaFold lab

Recaply: Google DeepMind just released The Thinking Game, a feature-length documentary filmed over five years that captures the AlphaFold team solving the 50-year-old protein folding problem, work that recently earned a Nobel Prize.

Key details:

The film follows co-founder Demis Hassabis and his team through major moments in their AI lab, including the exact moment researchers discovered they had cracked a grand challenge in biology.

It was made by the same award-winning team behind the AlphaGo documentary and spans five years of development, from complex strategy games to biological breakthroughs.

The documentary premiered at the Tribeca Festival and then toured internationally. After its free release on YouTube, it drew over 76,000 views in its first day.

Available for free on the Google DeepMind YouTube channel starting November 25, marking the fifth anniversary of AlphaFold's initial release.

Why it matters: After AlphaGo showed AI could master games, the big question was whether it could solve real scientific problems. This documentary captures the moment AI moved from games to one of biology's hardest challenges. Google positions this as proof that AGI research delivers real-world breakthroughs, not just better chatbots, though the five-year timeline also shows how difficult these advances remain.

PRESENTED BY SYNTHFLOW

A Better Way to Deploy Voice AI at Scale

Most Voice AI deployments fail for the same reasons: unclear logic, limited testing tools, unpredictable latency, and no systematic way to improve after launch.

The BELL Framework solves this with a repeatable lifecycle — Build, Evaluate, Launch, Learn — built for enterprise-grade call environments.

See how leading teams are using BELL to deploy faster and operate with confidence.

ANTHROPIC

Real conversations reveal AI impact

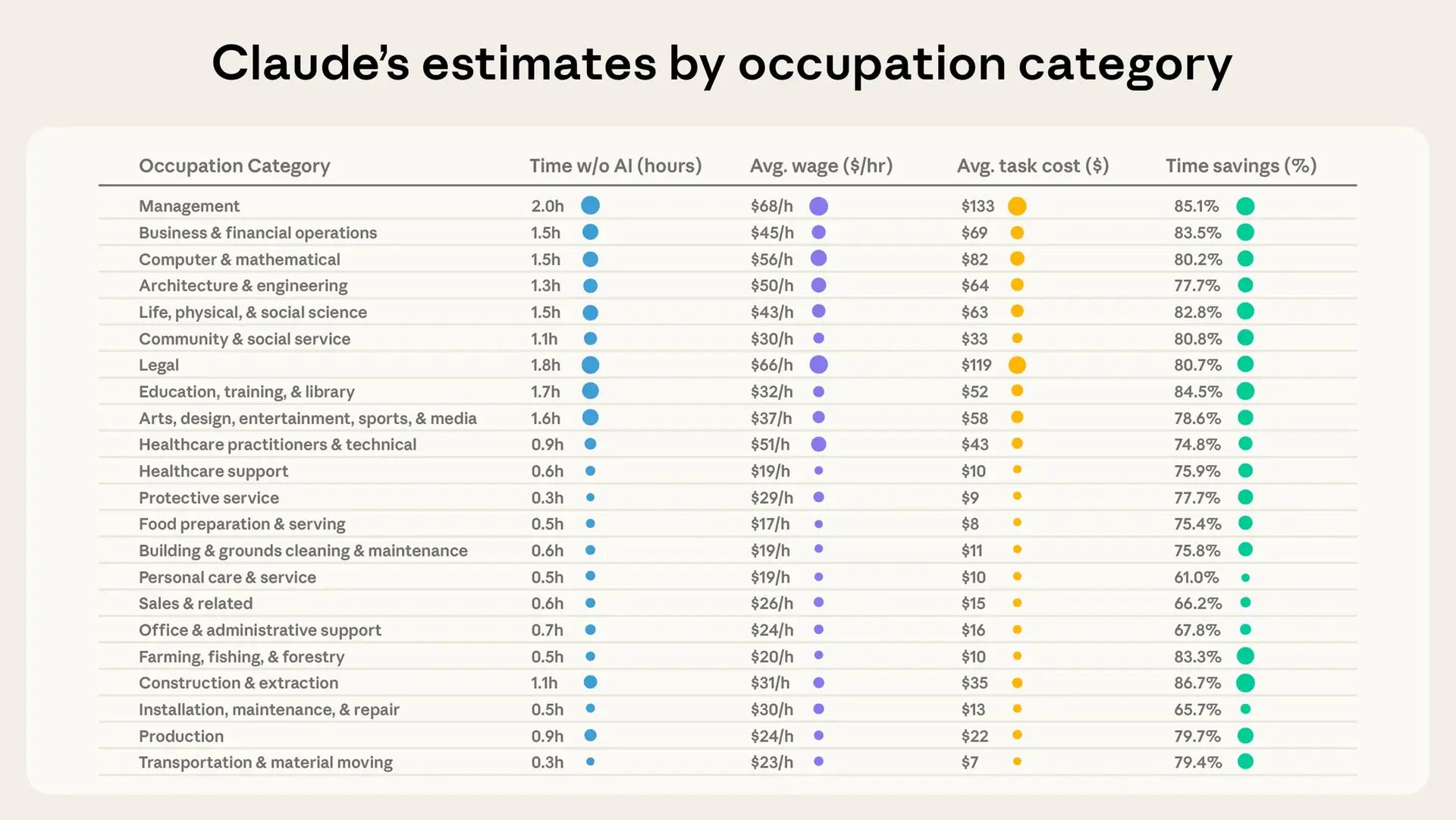

Recaply: Anthropic just released research that uses Claude to study 100,000 real user conversations. By Claude's estimates, the tasks in those conversations would take a person 90 minutes on average without AI help, and Claude cuts that time by roughly 80%.

Key details:

The privacy-preserving method prompts Claude to estimate how long each task would take a human without AI and how long it actually took with AI assistance, then maps conversations to occupational tasks in the O*NET taxonomy for economic modeling.

Claude's time estimates achieved a 0.44 correlation with actual tracked software development times, compared with 0.50 for human developers' estimates, suggesting they are directionally reliable despite lacking full context.

Time savings vary dramatically by task type, from a 95% reduction for compiling report information to just 20% for checking diagnostic images, suggesting uneven productivity effects.

The method can also track within-task variation over time, showing whether users tackle more complex projects or achieve greater efficiency gains as models improve.
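To make the headline numbers concrete: a roughly 80% cut to a 90-minute task leaves about 18 minutes. The short Python sketch below shows the two calculations behind figures like these, per-task time savings and a correlation check of estimated against tracked times. It is an illustration, not Anthropic's pipeline: the function names and sample numbers are hypothetical, and we assume "correlation" means Pearson's r (the study's exact statistic may differ).

```python
from math import sqrt


def time_savings(minutes_without_ai: float, minutes_with_ai: float) -> float:
    """Fraction of task time saved: 1 - (time with AI / time without AI)."""
    if minutes_without_ai <= 0:
        raise ValueError("time without AI must be positive")
    return 1.0 - minutes_with_ai / minutes_without_ai


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between estimated and actual task times (assumed metric)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Covariance and variances, left unnormalized since the n terms cancel.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


# Headline figure: a 90-minute task finished in 18 minutes is an 80% saving.
print(f"{time_savings(90, 18):.0%}")  # prints 80%

# Hypothetical estimated vs. tracked times in minutes (not study data).
estimated = [30, 45, 120, 60, 15]
actual = [50, 40, 90, 100, 20]
print(round(pearson_r(estimated, actual), 2))
```

A correlation of 1 would mean estimates track reality perfectly and 0 would mean no linear relationship, so values like 0.44 (Claude) and 0.50 (human developers) indicate estimates that point in the right direction without being precise.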

Why it matters: Past productivity studies relied on narrow lab experiments or coarse government statistics. Anthropic's method of analyzing real conversations at scale provides a middle ground, though Claude tends to overestimate short tasks and underestimate long ones. As models improve at time estimation, this approach could become increasingly valuable for tracking how AI reshapes actual work beyond controlled settings.

PERPLEXITY & PAYPAL

Your AI personal shopper is here

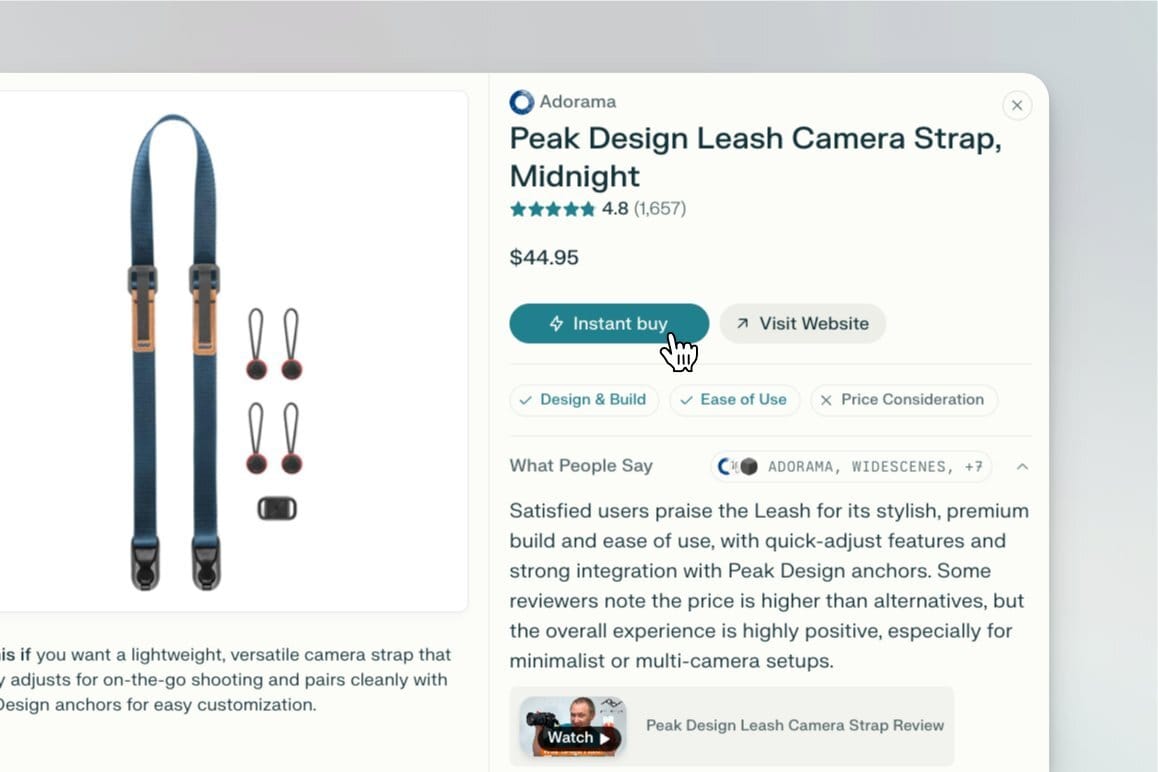

Recaply: Perplexity just made shopping personal with an AI assistant that learns your style from past searches and provides tailored product picks, with free PayPal instant checkout for US users.

Key details:

The shopping assistant remembers your preferences, so if you ask about a desk lamp today, it finds options matching the mid-century modern style from your previous searches. You don't need to explain your taste every time you shop.

Instead of scrolling endless grids, buyers get product cards showing what matters for their specific needs. You can ask conversational questions, like finding gifts for a chef with a tiny kitchen, and get curated results with reviews focused on your criteria.

Instant Buy through PayPal lets shoppers complete purchases in the same window and keep shopping without losing momentum. Retailers benefit from high-intent shoppers who are less likely to abandon their carts between decision and purchase.

It is free for all US users on desktop and web now, with iPhone and Android apps launching soon. It also works in Deep Research for detailed product comparisons and guides.

Why it matters: Online shopping has become overwhelming with endless product options and comparison requirements. Perplexity positions this as solving decision fatigue by having AI handle discovery and maintain context across questions. The partnership with PayPal addresses both checkout friction for buyers and merchant concerns about owning customer relationships, though adoption will depend on whether conversational search truly delivers better product matches than current recommendation engines.

NEWS

What Matters in AI Right Now?

Warner Music settled its lawsuit with Suno and partnered with it to launch licensed AI music models in 2026, with Suno also acquiring Warner's Songkick platform.

Amazon pushed engineers to use its Kiro coding tool over third-party options like Claude Code and Cursor, according to Reuters.

OpenAI integrated Voice Mode directly into ChatGPT's main interface, letting users talk and see real-time answers without switching to a separate mode.

Pusan National University researchers argued that humans and AI systems should share responsibility for AI-caused harm through distributed accountability models.

Siemens unveiled Engineering Copilot TIA, which autonomously executes automation engineering tasks, and is expanding it to additional pilot customers after a successful beta.

Black Forest Labs launched its FLUX.2 image generation model, supporting up to 10 reference images at once, 4MP resolution, and complex typography rendering.

Google added interactive images to the Gemini app, letting users tap parts of a diagram for definitions and detailed explanations of academic concepts.

TOOLS

AI Tools to Check Out

🧠 MyLens: Instantly visualize content as mindmaps, flowcharts, and timelines.

✍️ Videotowords: Transcribe videos and audio to text with multi‑language support.

🏗️ PromeAI: Turn design sketches into realistic architectural renderings.

🤖 AI Desk: Always‑on AI support trained on your business data.

🎬 Arcads: Generate ad‑style videos with AI actors and visuals.

🌍 TransGull: Translate voice, text, images, and videos with AI.

🗣️ Talkpal: Practice speaking languages with AI conversation tutors.

✨ Sudowrite: An AI writing partner designed for fiction.

* Some links in this newsletter may be from sponsors or affiliates. We may get paid if you buy something through these links.

PROMPTS

Write Investor Update Summary

Write a summary for our next investor update. Use highlights from [insert performance report or fundraising update]. Format the output as a concise executive email suitable for external stakeholders.

🧡 Enjoyed this issue?

🤝 Recommend our newsletter or leave feedback.

How'd you like today's newsletter?

Cheers, Jason